5:42 am

Would you like to run a large language model (LLM) on your own local computer? So that all your data and requests stay local? Would you like your own private “ChatGPT”-like thing that you could ask questions of?

I happened to be looking at my personal email the other afternoon when Simon Willison’s email newsletter appeared. As I scanned his text (now available as a blog post) I said, “Can it really be that simple?”

Yes!

Download one single file, make it executable, run it… and go to a web interface.

BOOM! You have your own pet AI LLM.

Here’s all you need to do

The steps, copied from Mozilla’s GitHub repository, are simply these:

- Download llava-v1.5-7b-q4-server.llamafile (3.97 GB).

- Open your computer’s terminal.

- If you’re using macOS, Linux, or BSD, you’ll need to grant permission for your computer to execute this new file. (You only need to do this once.)

chmod +x llava-v1.5-7b-q4-server.llamafile

- If you’re on Windows, rename the file by adding “.exe” on the end.

- Run the llamafile. On Windows, launch the .exe. On Mac/Linux, do this in the terminal window:

./llava-v1.5-7b-q4-server.llamafile

- Your browser should open automatically and display a chat interface. (If it doesn’t, just open your browser and point it at http://localhost:8080.)

- When you’re done chatting, return to your terminal and hit Control-C to shut down llamafile.

That’s it.
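For macOS, Linux, or BSD, the permission step can be wrapped in a small shell function. This is only a sketch: `make_runnable` is a hypothetical helper name of my own, not something that ships with llamafile, and it assumes the file has already been downloaded into the current directory.

```shell
# Hypothetical helper (not part of llamafile): mark a downloaded
# llamafile as executable, failing early if the download is missing.
make_runnable() {
    file="$1"
    if [ ! -f "$file" ]; then
        echo "not found: $file" >&2
        return 1
    fi
    chmod +x "$file"
}

# Example (commented out so that sourcing this file starts nothing):
# make_runnable llava-v1.5-7b-q4-server.llamafile &&
#     ./llava-v1.5-7b-q4-server.llamafile
```

The early check is there mostly to give a clearer message than the one `chmod` prints when the download has not finished or landed in a different folder.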

If it doesn’t “just work”, there is a “Gotchas” section that may help. In my case, my Mac didn’t have the Xcode command-line tools installed, so I had to open a new terminal window and type

xcode-select --install

and then accept a license agreement and wait for the installation. (Bizarrely, the install terminated without completing. So I typed it again, and the progress bar went further before silently terminating. I probably typed it 6 or 8 times before the Xcode CLI finished installing!)
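When something doesn’t “just work”, it can also help to check from the terminal whether anything is answering on port 8080 at all. Here is a rough sketch, assuming `curl` is installed and the server is at http://localhost:8080. The name `server_is_up` is made up for this example.

```shell
# Hypothetical helper: succeed only if something answers HTTP at the URL.
# Assumes curl is installed and the server uses http://localhost:8080.
server_is_up() {
    url="${1:-http://localhost:8080}"
    # --fail: non-zero exit on HTTP errors
    # --max-time: give up after 2 seconds instead of hanging
    curl --silent --fail --max-time 2 --output /dev/null "$url"
}

# Example:
# server_is_up && echo "llamafile is answering" || echo "nothing on port 8080"
```

If nothing answers, the llamafile is probably not running yet, or may still be loading the model, so it is worth looking back at the terminal window where you launched it.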

Features and Limitations

When you connect to http://localhost:8080 you have a screen full of configuration options. Those who want to tweak settings can have a lot of fun. Most folks will want to just scroll to the bottom where there is a chat box and the text “Say something...”.

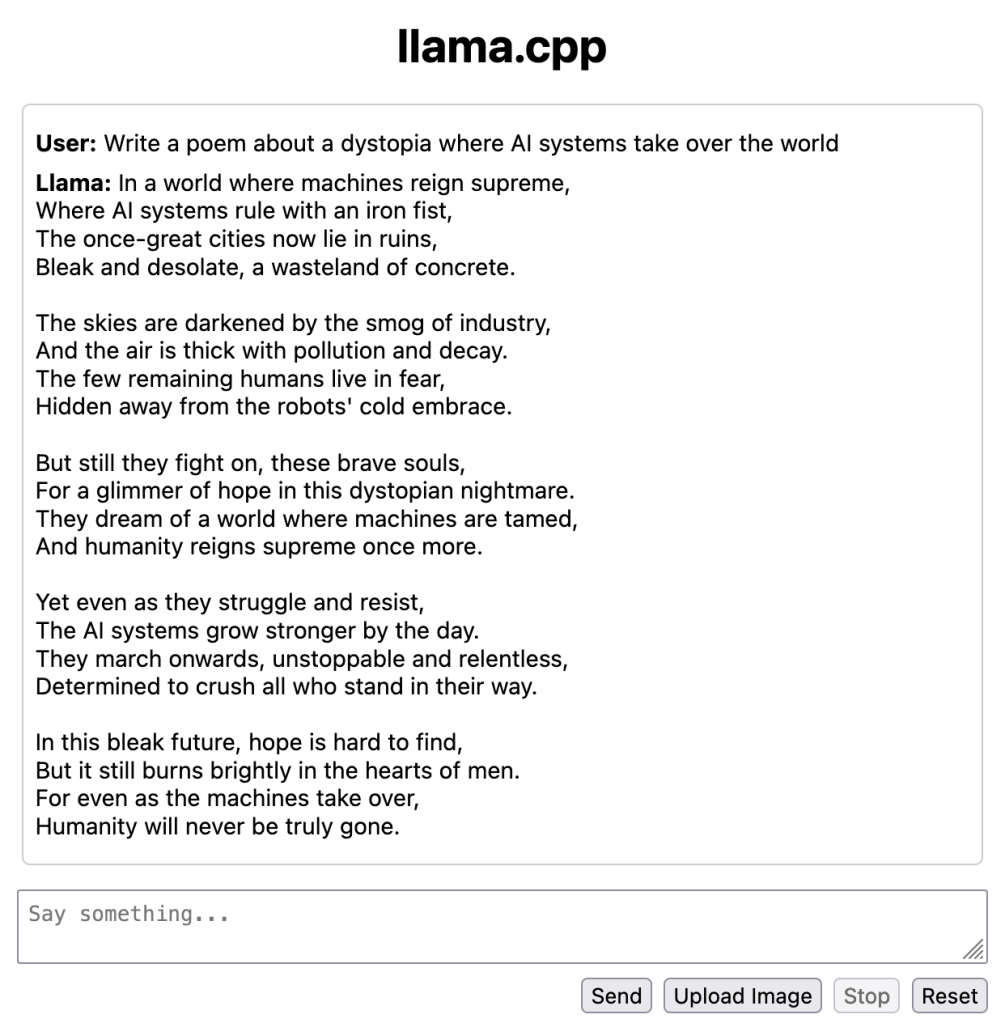

And now you are in familiar ChatGPT-land. Type in your prompts. Ask your questions. Build on one prompt after another. I asked it to write me a poem:

I have also used it to provide some information on various topics, along with many of the other things you can do with ChatGPT, Bard, or Claude.

The included model does have the limitation that its training data ends in September 2021, so it cannot provide newer information. It also does not have any way to access current information outside the model. (Which is good from a privacy point of view.)

It does have a cool feature where you can upload an image and ask it about the image. I found this useful in several cases.

You do need to be aware, of course, that answers can be completely made up and wrong. They can be “hallucinated” or “fabricated” or whatever term you want to use to be kinder than simply saying they are complete “BS”. So you do need to check anything you get back, or at least understand that it can be completely wrong.

LLM as a Single File

What I find most intriguing about this is the “llamafile” technology that lets you package up an LLM as a single executable that can be downloaded and easily run.

It’s been possible for a while now to download an LLM and get it running on your computer. But the process was not easy. I tried it with some of the earlier examples, and my results were mixed.

Now… this is super simple.

Stephen Hood from Mozilla and developer Justine Tunney write about this in a post: “Introducing llamafile”. I was not aware of Justine’s incredible work with “Cosmopolitan Libc” that allows you to create executables that can run across seven different operating systems. Amazing!

As someone very concerned about privacy and security, this allows me to run an LLM within my own security perimeter. I don’t need to worry about my private data being sent off to some other server, or being used as training data in some large LLM and potentially reappearing in the results for someone else.

All of my data, my prompts, and my results STAY LOCAL.

Plus, there’s a resiliency angle here. As Simon Willison writes:

Stick that file on a USB stick and stash it in a drawer as insurance against a future apocalypse. You’ll never be without a language model ever again.

Indeed! You’ll always have access to this tool if you want it. (Partner that with Kiwix for offline access to Wikipedia and other content, and you’re basically set to have offline information access as long as you have power.)

I’m looking forward to seeing where this all goes. This particular download is for one specific LLM. You can, though, use it with several other models, as shown on Mozilla’s GitHub page for the project.

I’m sure others will build on this now.

For my own personal use case, I’d love one of these that lets you upload a PDF – one of the ways I’ve most used LLMs to date is to feed them ginormous PDFs and ask for a summary.  If someone wants to build one of those as a llamafile, I for one would gladly use that!

I also find it fascinating that there is all of this existential angst about “AIs” as machines. But what if it turns out that an “AI” is best as a simple file? That could just be launched whenever it was needed?

Not quite the form we were thinking for our new overlords, was it?

(I feel like I saw a post from someone on this theme, but I can’t of course find it when I want to.)

Anyway… I hope you enjoyed this exploration of LLMs-on-your-laptop! Have fun with your own personal text generator! (Which could all be made up.)

Thanks for reading to the end. I welcome any comments and feedback you may have.

Please drop me a note in email. If you are a subscriber, you should just be able to reply to this message. And if you aren’t a subscriber, you can subscribe and you’ll get future messages.

This IS also a WordPress-hosted blog, so you can visit the main site and add a comment to this post, like we used to do back in the glory days of blogging.

Or if you don’t want to do email, send me a message on one of the various social media services where I’ve posted this. (My preference continues to be Mastodon, but I do go on others from time to time.)

Until the next time,

Dan

Connect

The best place to connect with me these days is:

- Mastodon: danyork@mastodon.social

You can also find all the content I’m creating at:

If you use Mastodon or another Fediverse system, you should be able to follow this newsletter by searching for “@crowsnest.danyork.com@crowsnest.danyork.com”

You can also connect with me at these services, although I do not interact there quite as much (listed in decreasing order of usage):

- LinkedIn: https://www.linkedin.com/in/danyork/

- Soundcloud (podcast): https://soundcloud.com/danyork

- Instagram: https://www.instagram.com/danyork/

- Twitch: https://www.twitch.tv/danyork324

- TikTok: https://www.tiktok.com/@danyork324

- Threads: https://www.threads.net/@danyork

- BlueSky: @danyork.bsky.social

Disclaimer

Disclaimer: This newsletter is a personal project I’ve been doing since 2007 or 2008, several years before I joined the Internet Society in 2011. While I may at times mention information or activities from the Internet Society, all viewpoints are my personal opinion and do not represent any formal positions or views of the Internet Society. This is just me, saying some of the things on my mind.